| Item/Cluster | Zephyr | Phoenix |
| --- | --- | --- |
| Nodes | 92 | 128 |
| Processors per Node | 2 | 2 |
| Total Processors | 184 | 256 |
| Processor Type | AMD Opteron 6273, 16-core, 2.3 GHz | AMD Opteron 2378, quad-core, 2.4 GHz |
| Cores per Processor | 16 (8 modules, each capable of executing 2 integer operations or 1 floating-point operation) | 4 (each core capable of executing 1 integer or 1 floating-point operation) |
| Total Cores | 2944 | 1024 |
| RAM per Node | 88 nodes at 32 GB, 2 nodes at 64 GB, and 2 nodes at 128 GB | 124 nodes at 8 GB and 4 nodes at 32 GB |
| RAM Speed | 1600 MHz | 667 MHz |
| Disk Storage per Node | 1 TB | 200 GB |
| Login Nodes | 2 | 3 |
| Administrative Nodes | 2 | 2 |
| Application Nodes (Sandbox) | 1 | 0 |
| Statistics Gathering Nodes | 1 | 1 |
| I/O Nodes | 1 | 4 |
| Ethernet Interconnect | Gigabit Ethernet / dual 10 Gb uplink | Gigabit Ethernet / dual 10 Gb uplink |
| InfiniBand Interconnect | QDR, 40 Gbps | DDR, 20 Gbps |
| File System Usable Storage | Lustre-based, 120 TB (RAID 6) | GPFS-based, 90 TB |
| Operating System | CentOS Linux 6.2 | Red Hat Enterprise Linux 4.8 |
| Tape Storage | Shared 160 TB tape library | |
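The derived rows in the table follow from simple arithmetic: total processors = nodes × processors per node, and total cores = total processors × cores per processor (for Zephyr, 92 × 2 × 16 = 2944). The short Python sketch below recomputes those rows and also sums the "RAM per Node" breakdown into an aggregate memory figure that the table does not list. The `Cluster` class and its field names are hypothetical, introduced only for illustration, and the sketch assumes the "Nodes" row counts exactly the nodes itemized in the "RAM per Node" row (the counts match: 88 + 2 + 2 = 92 and 124 + 4 = 128).

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical container for a few rows of the comparison table."""
    name: str
    processors_per_node: int
    cores_per_processor: int
    # (node_count, gb_per_node) pairs taken from the "RAM per Node" row
    ram_groups: list[tuple[int, int]]

    @property
    def nodes(self) -> int:
        # Node count is the sum of the node counts in each RAM group
        return sum(count for count, _ in self.ram_groups)

    @property
    def total_processors(self) -> int:
        return self.nodes * self.processors_per_node

    @property
    def total_cores(self) -> int:
        return self.total_processors * self.cores_per_processor

    @property
    def total_ram_gb(self) -> int:
        # Aggregate memory: node count times per-node RAM, summed over groups
        return sum(count * gb for count, gb in self.ram_groups)


ZEPHYR = Cluster("Zephyr", 2, 16, [(88, 32), (2, 64), (2, 128)])
PHOENIX = Cluster("Phoenix", 2, 4, [(124, 8), (4, 32)])

for c in (ZEPHYR, PHOENIX):
    print(f"{c.name}: {c.nodes} nodes, {c.total_processors} processors, "
          f"{c.total_cores} cores, {c.total_ram_gb} GB RAM")
```

Running it prints 92 nodes, 184 processors, 2944 cores, and 3200 GB of aggregate RAM for Zephyr, and 128 nodes, 256 processors, 1024 cores, and 1120 GB for Phoenix, which agrees with the table's own totals.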

Network Access

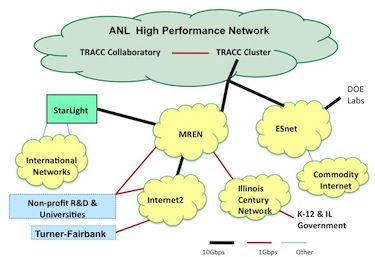

The TRACC cluster is served by high-bandwidth networks with connectivity to the main Argonne National Laboratory network. As part of the Argonne network, TRACC's networks are connected to the national and international research and education network infrastructure and are protected by Argonne's cybersecurity team.

Applications Software

In establishing TRACC, the U.S. Department of Transportation (USDOT) identified several national high-priority transportation research issues. The key applications identified by USDOT require software capabilities in three areas: traffic simulation, computational structural mechanics, and computational fluid dynamics.

As a result, specific engineering analysis software has been installed on TRACC's massively parallel computer system for use by the USDOT research community:

- The USDOT-developed software system, TRANSIMS, is being used for traffic modeling, including such applications as traffic simulation in metropolitan areas, evacuation planning and evaluation, and long-range regional planning.

- The commercial codes LS-DYNA® and LS-OPT® are being used for computational structural mechanics applications, such as bridge stability and dynamics analysis, occupant injury assessment, crashworthiness, roadside hardware evaluation, roadside material modeling, and the performance of pavement structures.

- The commercial codes STAR-CD® and STAR-CCM+ are being used at TRACC for computational fluid dynamics in applications such as bridge hydraulics analysis, flow-induced vibration of bridge components, and vehicle aerodynamics.

- The GNU, Intel, and PathScale compilers and the Intel MPI library are available for users who wish to run their own software on the cluster.

- NoMachine NX is provided for secure remote desktop virtualization, enabling users to run graphical and visualization software on the cluster.